This week we spent a lot of time going over last week's assignments built with ofxKinect, ofxOpenNI, and ofxFaceShift. There were a few short detours into discussions of:

- Microsoft's tracking technique used for the Xbox and MS Kinect SDK ("Real-Time Human Pose Recognition in Parts from Single Depth Images")

- Hand and finger tracking, extending Microsoft's technique directly, or aided by lycra gloves, or using a new super-accurate algorithm from CVRL

- Detecting and generating silly walks, silliness as an unexpected deviation from the mean, and caricatures as exaggeration of deviation
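The last idea can be written down in a few lines. This is only a minimal sketch of the concept, not code from class: it assumes a pose is a flat list of numbers (joint angles, say), and the names `mean_pose`, `deviation`, and `caricature`, as well as the exaggeration factor `k`, are made up for illustration.

```python
def mean_pose(poses):
    """Average a list of poses, each a list of joint values (assumed representation)."""
    n = len(poses)
    return [sum(p[i] for p in poses) / n for i in range(len(poses[0]))]

def deviation(pose, mean):
    """Euclidean distance from the mean pose: a crude 'silliness' score."""
    return sum((a - m) ** 2 for a, m in zip(pose, mean)) ** 0.5

def caricature(pose, mean, k=2.0):
    """Exaggerate a pose by scaling its deviation from the mean by k (hypothetical factor)."""
    return [m + k * (a - m) for a, m in zip(pose, mean)]
```

With `k = 1` the pose comes back unchanged, with `k = 0` it collapses to the mean, and anything above 1 pushes it further from the mean in the direction it already deviates.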

We then discussed a host of examples that live in the Appropriating New Technologies repository related to 3d visualization. These examples demonstrate rendering point clouds, slices, voxels, meshes, with and without lighting, depth of field, and some other filmic techniques.

I also highly recommend checking out James George's RGBDToolkit for some nice realtime wireframe mesh rendering with depth of field.

James' code still needs to be broken out into a separate addon/example; right now it's deeply embedded within the RGBDToolkit application.

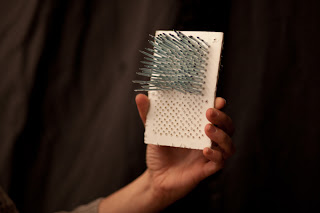

This week's assignment is to fabricate a physical 3d model. This means you have to take what were once "measurements" or "coordinates" and construct a physical object based on those values. This might mean:

- Folding some paper using papercraft techniques

- Stringing lots of beads on wires

- Printing slices of point clouds onto transparent sheets of material

- Using slices of laser-cut cardboard

- Or even, yes, using a 3d printer such as a MakerBot with a Kinect, or the ZCorp printer at the AMS

The goal is to become familiar with at least one technique for getting 3d data from the computer screen to the real world. You're encouraged, first, to build your own tools and be creative with the technique that you choose to manifest the 3d data. However, if you're not building your own tools for this assignment, then your creativity should still be clear from the quality, aesthetic, and concept behind the final object.
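If you do want to build your own tool for one of the slice-based approaches above, a starting point might look like the following. It is a rough sketch under a few assumptions: the point cloud is a list of `(x, y, z)` tuples, slices are cut at equal thickness along z, and the function name `slice_point_cloud` is hypothetical rather than part of any addon.

```python
def slice_point_cloud(points, num_slices):
    """Group (x, y, z) points into num_slices layers of equal thickness along z.

    Returns a list of layers, nearest z first; each layer is a list of (x, y)
    positions that could be drawn onto one sheet.
    """
    if not points or num_slices < 1:
        return []
    zs = [z for _, _, z in points]
    z_min, z_max = min(zs), max(zs)
    # Fall back to a thickness of 1.0 when the cloud is flat, to avoid dividing by zero.
    thickness = (z_max - z_min) / num_slices or 1.0
    layers = [[] for _ in range(num_slices)]
    for x, y, z in points:
        # Clamp so that a point exactly at z_max lands in the last layer.
        index = min(int((z - z_min) / thickness), num_slices - 1)
        layers[index].append((x, y))
    return layers
```

Each returned layer could then be exported as its own image or vector file, one per transparency or cardboard sheet. Choosing `num_slices` from the thickness of your material keeps the stacked object in proportion.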
